If you run a WordPress site, you have probably come across a robots.txt file. Do you know what it is? It is a plain text file that tells web crawlers which parts of your website they may and may not access.

The better you understand how robots.txt files work, the clearer their value becomes. You’ll also find that you can add a robots.txt file to most WordPress websites, which lets you set rules of your own.

The robots.txt file in WordPress

The “robots” in the name refers to the “bots” that crawl the internet. The best known are the ones search engines use to index and rank websites.

These bots help your website appear on a search engine results page (SERP). However, that doesn’t mean you want them to have unrestricted access to your site. This was recognized as a concern in the mid-1990s, which led to the creation of the robots exclusion standard.

Robots.txt is the implementation of this standard: it allows webmasters to specify how bots interact with their sites.

With robots.txt, webmasters can block bots from their sites entirely, or restrict their access to specific areas of the site.

It is important to remember that robots.txt only works for “participating” bots. It cannot force a bot to obey the rules. A malicious bot will simply ignore the robots.txt file and its rules.

Even benign bots may ignore some robots.txt rules. For instance, Google’s bots do not respect rules that limit how often they may visit a site (the Crawl-delay directive).
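To illustrate what “participating” means in practice, here is a minimal sketch of the check a well-behaved crawler might run before requesting a page, using Python’s standard `urllib.robotparser` module. The bot name `ExampleBot`, the domain `example.com`, and the rules themselves are made up for illustration. `Crawl-delay` is the directive that asks bots to wait between visits.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules, not taken from any real site.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Disallow: /wp-admin/
"""

def plan_request(user_agent, url):
    """Return (allowed, delay_seconds) as a cooperative bot would see them."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    allowed = parser.can_fetch(user_agent, url)
    delay = parser.crawl_delay(user_agent)  # None if no Crawl-delay applies
    return allowed, delay

print(plan_request("ExampleBot", "https://example.com/wp-admin/"))
print(plan_request("ExampleBot", "https://example.com/blog/"))
```

Nothing here is enforced by the server. A bot that skips this check, or chooses to ignore the delay, can still request the page.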

Is a robots.txt file essential for my WordPress website?

Webmasters benefit from a robots.txt file because it tells search engine crawlers which pages on the site to pay attention to for indexing. This helps ensure that the most important pages get noticed while less important pages are skipped. A good set of rules can also keep bots from using up your site’s server resources.

How to modify it in WordPress

When you create your WordPress website, a virtual robots.txt file is created automatically. Because it is a virtual file, it cannot be edited directly. If you’d like to modify it, you’ll need to create a physical file.

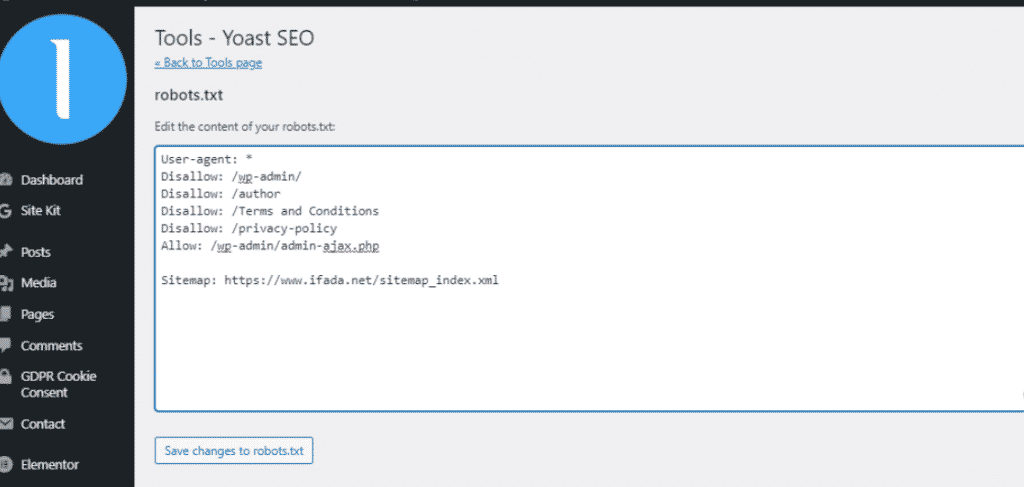

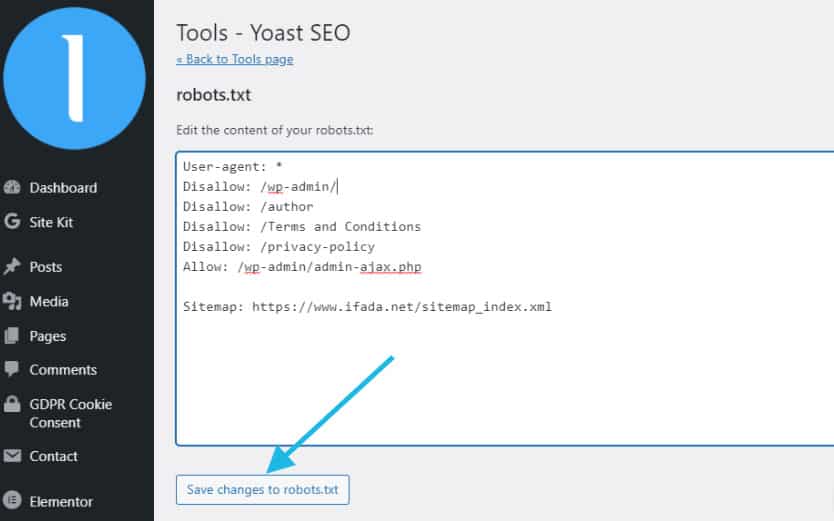

One way to do this is with the Yoast SEO plugin. First enable Yoast’s advanced features: in WordPress, go to SEO, then Dashboard, then Features, and switch on the Advanced settings pages option.

In the left-hand menu of your WP Dashboard, you will find “Y SEO” (the Yoast plugin’s icon). Select Tools, then File Editor. One of the options on this screen is to create a robots.txt file.

Users who prefer the All In One SEO Pack plugin can simply go to the Feature Manager and activate the Robots.txt feature.

Even without an SEO plugin, you can use SFTP to create a physical robots.txt file. Simply create a file named robots.txt in a text editor, then connect to your site via SFTP and upload the file to the website’s root folder.
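If you would rather generate the file than type it by hand, a short script can do it. The following is only a sketch: the helper names `build_robots_txt` and `write_robots_txt` are made up for this example, the rules are placeholders, and the temporary staging folder stands in for wherever you keep files before uploading them over SFTP.

```python
import tempfile
from pathlib import Path

def build_robots_txt(groups):
    """Render (user_agent, [(directive, path), ...]) pairs as robots.txt text."""
    blocks = []
    for user_agent, rules in groups:
        lines = [f"User-agent: {user_agent}"]
        lines += [f"{directive}: {path}" for directive, path in rules]
        blocks.append("\n".join(lines))
    # A blank line separates one group of rules from the next.
    return "\n\n".join(blocks) + "\n"

def write_robots_txt(folder, groups):
    """Write the rules to <folder>/robots.txt and return the file path."""
    # The file must be named exactly robots.txt (note the "s").
    path = Path(folder) / "robots.txt"
    path.write_text(build_robots_txt(groups), encoding="utf-8")
    return path

# Example rules only; adjust them to your own site.
staging = tempfile.mkdtemp()
path = write_robots_txt(staging, [("*", [("Disallow", "/wp-admin/")])])
print(path.read_text(encoding="utf-8"))
```

The resulting file is what you would upload to the website’s root folder.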

Two directives are at the heart of the robots.txt file. The first is “User-agent,” which lets you target specific bots. The other is “Disallow,” which tells visiting bots not to access specific pages or folders.

Suppose you want to stop a specific bot from accessing your site. To keep things simple, suppose you wish to block Google’s bots. Here’s how the rules could look:

User-agent: Googlebot

Disallow: /
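One way to sanity-check a rule like this before uploading it is to feed it to a parser and ask what a given bot may fetch. The sketch below uses Python’s standard `urllib.robotparser`. `ExampleBot` and `example.com` are placeholders, and real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: Googlebot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

# Googlebot is named in the rule, so the whole site is off limits to it.
googlebot_allowed = parser.can_fetch("Googlebot", "https://example.com/")
# A bot that is not named has no rule applying to it.
other_allowed = parser.can_fetch("ExampleBot", "https://example.com/")

print(googlebot_allowed, other_allowed)
```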

In another scenario, you might not want bots to access a specific file or folder on your site. For example, you may not want bots to access the wp-admin folder or wp-login.php. These are the kinds of rules you’d apply:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-login.php

Using “*” as the user-agent applies the rules to every bot.
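It helps to know that a Disallow path is matched as a prefix, so blocking a folder also blocks everything inside it. Here is a small sketch using Python’s standard `urllib.robotparser`. The bot names and sample paths are invented, and `blocked_paths` is a helper written just for this example.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-login.php
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

def blocked_paths(user_agent, paths):
    """Return the paths that the rules above disallow for user_agent."""
    return [p for p in paths if not parser.can_fetch(user_agent, p)]

paths = ["/wp-admin/options.php", "/wp-login.php", "/blog/hello-world/"]

# Because of the "*" wildcard, any bot name gives the same answer.
print(blocked_paths("ExampleBot", paths))
print(blocked_paths("AnotherBot", paths))
```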

In another case, suppose you want bots to be able to access a specific file inside a folder that is otherwise disallowed. This is what you’d do:

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php
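This works because major crawlers such as Googlebot resolve a conflict between Allow and Disallow by picking the most specific rule, meaning the one with the longest matching path. The sketch below hand-rolls that logic to show the idea. It is a simplified illustration, not a complete parser: `is_allowed` is a made-up helper, it ignores `*` and `$` wildcards, and some simpler parsers apply rules in file order instead.

```python
def is_allowed(path, rules):
    """Apply longest-match precedence to (directive, prefix) rules.

    Simplified sketch: plain prefix matching only, no * or $ wildcards.
    """
    best_len = -1
    allowed = True  # no matching rule means the path is allowed
    for directive, prefix in rules:
        if not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        # Longer prefix wins; on a tie, Allow wins over Disallow.
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed

rules = [
    ("Disallow", "/wp-admin/"),
    ("Allow", "/wp-admin/admin-ajax.php"),
]

print(is_allowed("/wp-admin/admin-ajax.php", rules))  # True
print(is_allowed("/wp-admin/options.php", rules))     # False
print(is_allowed("/blog/", rules))                    # True
```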

Let’s say you want certain rules to apply to some bots but not others. In that case, you’ll need two sets of rules: the first applies to every bot that has no set of its own, while the second applies only to Google’s bots.

User-agent: *

Disallow: /wp-admin/

User-agent: Googlebot

Disallow: /
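To see how the two sets of rules play out, you can again ask a parser what each bot may fetch. This sketch uses Python’s standard `urllib.robotparser`. `ExampleBot` stands for any bot other than Googlebot, and `access_report` is a helper invented for this example. Note that each set keeps its User-agent and Disallow lines together, with a blank line only between the sets.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: *
Disallow: /wp-admin/

User-agent: Googlebot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

def access_report(user_agent):
    """Map a few sample paths to whether user_agent may fetch them."""
    sample_paths = ["/", "/blog/", "/wp-admin/"]
    return {path: parser.can_fetch(user_agent, path) for path in sample_paths}

# Googlebot matches its own set of rules and is blocked everywhere.
print(access_report("Googlebot"))
# Any other bot falls back to the "*" set and is only kept out of wp-admin.
print(access_report("ExampleBot"))
```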

Do you need to modify the robots.txt file?

Casual WordPress users don’t need to edit robots.txt. However, that could change if a specific bot is causing problems, if you need to control how search engines interact with particular WordPress themes or plugins, or possibly depending on the web hosting provider you use.